OpenAI’s newest artificial intelligence model, GPT-4o, brings significant advances to ChatGPT. It is better at understanding text, images, and languages other than English, and it responds faster.

After GPT-4o was announced, Google searches on how to use the new model increased.

OpenAI is rolling out the model gradually rather than to every user at once.

When will GPT-4o be available?

The GPT-4o model will be available to all ChatGPT users, including those on the free version. OpenAI is staggering the rollout so that every user gets an optimized, stable experience.

When the model becomes available in your account, you will receive a notification within the platform letting you know that you can start using GPT-4o.

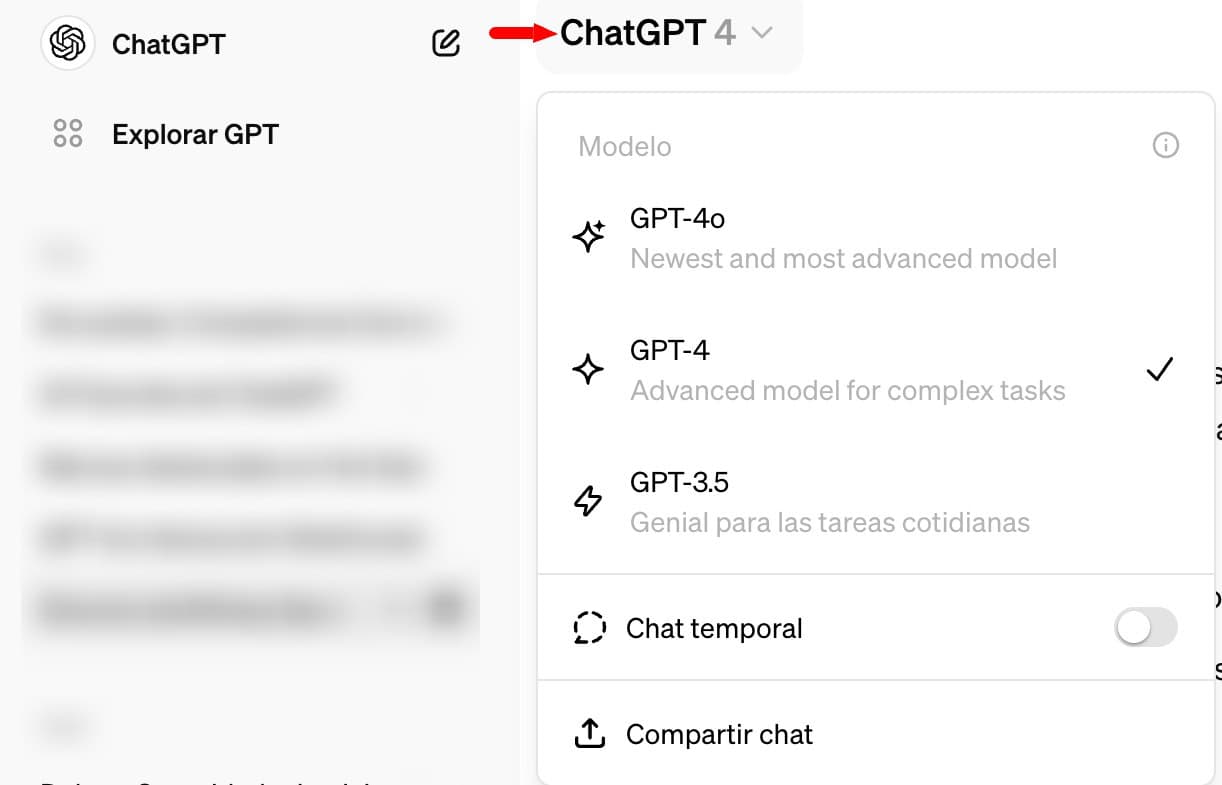

Accessing GPT-4o

Once GPT-4o is available to you, accessing it is simple: open the model menu at the top of the ChatGPT interface and select GPT-4o. From there, you can start using its enhanced capabilities, including more natural interactions and faster responses.

What’s new in ChatGPT with GPT-4o?

Among its most notable new features, GPT-4o offers real-time translation. Users can ask the model to translate a conversation as it happens, for example from Italian to Spanish, making it easier for speakers of different languages to communicate.

The model can also analyze images. Users can show it a photo or screenshot and get detailed information about it, from identifying a car model to spotting errors in code.

- Voice interaction: Users will be able to talk with ChatGPT using only their voice and receive spoken responses about as quickly as a person would reply.

- Image and video analysis: GPT-4o can process visual content shared by users and hold conversations about it.

- Free for everyone: Unlike GPT-4, this new model will be available for free to all users, on both mobile devices and computers.

- New applications: OpenAI has launched a dedicated desktop app for Mac, with a Windows version planned, in addition to the existing apps for iOS and Android.

How does GPT-4o work?

GPT-4o keeps ChatGPT’s core function of answering user questions and requests, but it can now also do so by voice. During the presentation, OpenAI demonstrated the model telling stories, adapting to user requests, and even changing its tone of voice.

What does multimodality imply?

Multimodality lets users interact with ChatGPT in a more natural and versatile way, whether through text, voice, or images. This opens up uses in fields ranging from education to entertainment.

GPT-4o is expected to help keep ChatGPT at the forefront of the chatbot market and to boost its growth and usage. There are also rumors that OpenAI may be negotiating with Apple to integrate this technology into Siri, Apple’s voice assistant.


Why is it called GPT-4o?

On its website, OpenAI explains that the ‘o’ stands for “omni.” The company states:

GPT-4o (“o” for “omni”) is a step towards much more natural human-computer interaction: it accepts as input any combination of text, audio, and image, and generates any combination of text, audio, and image outputs.

It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation.

It matches GPT-4 Turbo performance on text in English and code, with significant improvement on text in non-English languages, while also being much faster and 50% cheaper in the API. GPT-4o is especially better at understanding vision and audio compared to existing models.
